Media Choreography with the SC State Engine

Responsive media environments combine a variety of separate media instruments as outputs, such as theatrical lighting, video projection, ambisonic sound, and physical actuators. A number of sensory modalities are also available as inputs to control these instruments, such as video cameras, inertial measurement units, microphones, proximity sensors, and environmental sensors. Traditionally, the logic that maps these inputs to the desired feedback is hard-coded by a media programmer. As environments grow more complex, these mappings become tangled and difficult to adapt to new behaviors. In larger projects, many instrument designers may be collaborating, and it can be hard to design how the media environment evolves as a whole.

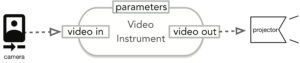

An example media instrument: sensor data from a camera is interpreted by the instrument to provide specific output to a video projector.
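For contrast, here is a minimal sketch of the traditional, hard-coded kind of instrument, assuming a camera frame arrives as a 2D list of grayscale intensities and the projector exposes a single brightness value. The functions `motion_energy` and `projector_brightness` are hypothetical illustrations, not part of SC; note how the sensor interpretation and the output behavior are fused into one fixed rule.

```python
from typing import List

# Hypothetical frame format: rows of grayscale pixel intensities in [0, 1].
Frame = List[List[float]]


def motion_energy(prev: Frame, curr: Frame) -> float:
    """Mean absolute per-pixel difference between two frames (a simple motion feature)."""
    total = 0.0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += abs(b - a)
            count += 1
    return total / count if count else 0.0


def projector_brightness(energy: float, gain: float = 4.0) -> float:
    """Hard-coded mapping: more motion gives a brighter projection, clamped to [0, 1]."""
    return max(0.0, min(1.0, gain * energy))


prev = [[0.0, 0.0], [0.0, 0.0]]
curr = [[0.2, 0.0], [0.0, 0.2]]
print(projector_brightness(motion_energy(prev, curr)))
```

Changing this behavior (say, dimming on motion during one part of a piece) means editing the code itself, which is the difficulty the State Engine is meant to address.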

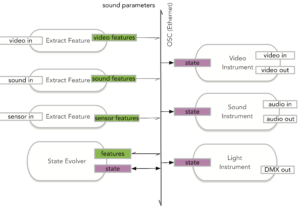

With the State Engine, each instrument's behavior is controlled by its state, which is kept separate from the logic that extracts features from the sensors. The State Engine then defines the mapping from those extracted features to the states of the instruments.
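A minimal sketch of this separation, assuming features arrive as a dictionary of named values and each instrument stores one parameter preset per state. `Instrument`, `StateMapping`, and the state and feature names are hypothetical and do not reflect SC's actual API; the point is that the instrument never reads sensor data, while the mapping never touches instrument parameters directly.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

Features = Dict[str, float]  # output of sensor-side feature extraction
Preset = Dict[str, float]    # instrument parameters for one state


@dataclass
class Instrument:
    """Hypothetical instrument: it only knows its presets and never reads sensors."""
    name: str
    presets: Dict[str, Preset]
    current: Preset = field(default_factory=dict)

    def set_state(self, state: str) -> None:
        # Copy so later edits to `current` cannot alter the stored preset.
        self.current = dict(self.presets[state])


@dataclass
class StateMapping:
    """Hypothetical mapping from extracted features to a state name."""
    rule: Callable[[Features], str]

    def apply(self, features: Features, instrument: Instrument) -> None:
        instrument.set_state(self.rule(features))


lights = Instrument(
    name="lights",
    presets={
        "calm": {"intensity": 0.2, "hue": 0.6},
        "agitated": {"intensity": 0.9, "hue": 0.0},
    },
)
mapping = StateMapping(rule=lambda f: "agitated" if f["motion"] > 0.5 else "calm")

mapping.apply({"motion": 0.8}, lights)
print(lights.current)  # {'intensity': 0.9, 'hue': 0.0}
```

Swapping in a different `rule` changes how the environment responds without touching the instrument's presets, and vice versa.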

Example state topologies: several different state engines can run concurrently, each mapping to different instruments or different aspects of a single instrument.

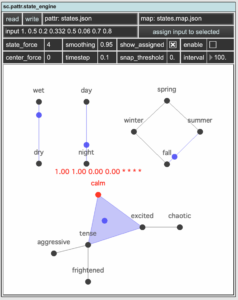
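Running several state engines concurrently can be sketched as independent engines evaluated on the same feature set, each writing only the outputs it owns. This is a hypothetical illustration rather than SC's interface: `Engine`, `run_engines`, and the `(instrument, aspect)` targets are invented here. Two engines drive different aspects (`strobe` and `color`) of the same `lights` instrument.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]
Target = Tuple[str, str]  # hypothetical (instrument, aspect) pair


@dataclass
class Engine:
    """Hypothetical state engine: a rule over features plus the outputs it drives."""
    name: str
    rule: Callable[[Features], str]
    targets: List[Target]


def run_engines(engines: List[Engine], features: Features) -> Dict[Target, str]:
    """Evaluate every engine on the same features; each writes only its own targets."""
    assignments: Dict[Target, str] = {}
    for engine in engines:
        state = engine.rule(features)
        for target in engine.targets:
            assignments[target] = state
    return assignments


engines = [
    Engine("tempo", lambda f: "fast" if f["motion"] > 0.5 else "slow",
           [("sound", "rhythm"), ("lights", "strobe")]),
    Engine("mood", lambda f: "bright" if f["proximity"] < 1.0 else "dark",
           [("video", "color"), ("lights", "color")]),
]
print(run_engines(engines, {"motion": 0.7, "proximity": 2.0}))
```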

To address the complexity of hand-coded mappings, the SC toolkit contains a dynamical State Engine that automatically maps sensor inputs to predefined states of media instruments. Instrument designers define preset behaviors for their instruments that map onto metaphorical states. Designers of the responsive environment as a whole can then define a state mapping that determines which states the environment can be in and how it transitions from one state to another in response to sensor input.
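The sketch below illustrates one way such a dynamical engine could work, under loudly stated assumptions: the state mapping is a directed graph whose edges are guarded by conditions on sensor features, and the continuous state is a weight per state that relaxes exponentially toward the current target state. That first-order relaxation is a stand-in chosen for simplicity, not the SC State Engine's documented update rule. All names (`topology`, `presets`, `StateEngine`, the state labels, and the feature keys) are hypothetical.

```python
from typing import Callable, Dict

Features = Dict[str, float]
Preset = Dict[str, float]

# Hypothetical state mapping: allowed transitions, each guarded by a feature condition.
Transition = Dict[str, Callable[[Features], bool]]
topology: Dict[str, Transition] = {
    "rest": {"gather": lambda f: f["occupancy"] > 0.3},
    "gather": {"rest": lambda f: f["occupancy"] < 0.1,
               "surge": lambda f: f["motion"] > 0.6},
    "surge": {"gather": lambda f: f["motion"] < 0.3},
}

# Hypothetical presets an instrument designer defines for each metaphorical state.
presets: Dict[str, Preset] = {
    "rest": {"brightness": 0.1, "volume": 0.0},
    "gather": {"brightness": 0.5, "volume": 0.4},
    "surge": {"brightness": 1.0, "volume": 0.9},
}


class StateEngine:
    """Assumed continuous state: one weight per state, relaxing toward the target."""

    def __init__(self, initial: str, rate: float = 0.5) -> None:
        self.target = initial
        self.rate = rate  # fraction of the remaining distance covered per step
        self.weights = {s: (1.0 if s == initial else 0.0) for s in topology}

    def step(self, features: Features) -> None:
        # Follow the first enabled transition out of the current target state.
        for nxt, condition in topology[self.target].items():
            if condition(features):
                self.target = nxt
                break
        # First-order relaxation; because the goals sum to 1, the weights do too.
        for s in self.weights:
            goal = 1.0 if s == self.target else 0.0
            self.weights[s] += self.rate * (goal - self.weights[s])

    def blended(self) -> Preset:
        """Instrument parameters as the weight-averaged presets."""
        params = {k: 0.0 for k in presets[self.target]}
        for s, w in self.weights.items():
            for k, v in presets[s].items():
                params[k] += w * v
        return params


engine = StateEngine("rest")
for frame in [{"occupancy": 0.5, "motion": 0.2}] * 3:
    engine.step(frame)
print(engine.target, engine.blended())
```

Because every weight moves the same fraction toward its goal, the weights stay normalized, so the output is always a blend of designer-defined presets rather than an arbitrary value, and a state change fades in over several steps instead of cutting abruptly.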

In a linear choreography, for example, the states could be the acts of a performance; in a non-linear choreography, they could be moods or other high-level states that each instrument defines in its own way.
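The difference between the two kinds of choreography shows up in the shape of the state topology. A minimal sketch, assuming a topology is simply a map from each state to the set of states it may transition to; the helper names `linear` and `nonlinear` and the state labels are hypothetical.

```python
from itertools import permutations
from typing import Dict, List, Set

Topology = Dict[str, Set[str]]  # state -> states it may transition to


def linear(acts: List[str]) -> Topology:
    """Each act may only advance to the next one; the last act is terminal."""
    topo: Topology = {a: set() for a in acts}
    for a, b in zip(acts, acts[1:]):
        topo[a].add(b)
    return topo


def nonlinear(moods: List[str]) -> Topology:
    """Every mood may transition to every other mood (no self-loops)."""
    topo: Topology = {m: set() for m in moods}
    for a, b in permutations(moods, 2):
        topo[a].add(b)
    return topo


print(linear(["act1", "act2", "act3"]))
print({k: sorted(v) for k, v in nonlinear(["calm", "tense", "joyful"]).items()})
```

In the linear case, sensor input can only decide *when* the piece advances; in the non-linear case, it also decides *where* the environment goes next.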

Team

Brandon Mechtley: Lead Researcher | Synthesis

References

Sha, X. W., M. Fortin, N. Navab, and T. Sutton. 2010. “Ozone: Continuous State-Based Media Choreography System for Live Performance.” In Proceedings of the 18th ACM International Conference on Multimedia, pp. 1383-1392.

Mechtley, B., T. Ingalls, J. Stein, C. Rawls, and X. W. Sha. 2019. “SC: A Modular Software Suite for Composing Continuously Evolving Responsive Environments.” In Living Architecture Systems Group White Papers 2019, edited by P. Beesley, S. Hastings, and S. Bonnemaison, pp. 196-205. Kitchener, ON: Riverside Architectural Press.