Research

Telematic Embodied Learning

The Synthesis Center draws on decades of research and techniques that allow people to move about freely, manipulate physical objects, and use their senses of touch and proprioception. As pandemic-induced shutdowns accelerate the transition to blended and distance learning, we ask: Can we design learning experiences that let students and teachers in different locations use the affordances of their respective physical spaces and bodily abilities (Merleau-Ponty 2013, Rajko 2017, Sheets-Johnstone 2011, 2013, 2016)?

Goals

In collaboration with experts in higher education and community stakeholders, the Synthesis Center is developing a suite of tools for collaborative, portable, mixed reality environments. These tools free students and teachers from continuous screen use and reintroduce spatial awareness and interaction wherever hybrid teaching methods are needed. Validating this novel learning experience will require iterative, community-driven design, custom development of low-cost software and hardware, and a pedagogical analysis that builds on, but also moves beyond, traditional methods of assessment and engagement.

This is a multidisciplinary effort that prioritizes:

- Shared experience in live settings, paying attention to spatial, corporeal, and social affordances to enable ensemble activities.

- Gesture-based tools that minimize the fatigue caused by extended use of screen-based technology.

- Accommodating unanticipated pedagogical practices invented by teachers (or students) for specialized needs, which may have distinct gestural idioms and techniques.

- Minimizing content development costs and user cognitive load without compromising the social aspects of learning: body language, a sense of physical presence, tangible affordances, and synchronous interaction between learners and teachers.

- Imbuing media objects (image, video, sound, text, freehand squiggle) with a tangibility that is analogous to that of physical experience.

- Easy incorporation of ad hoc bodily action and movement, and of the affordances of physical surroundings and objects, into pedagogical activity without requiring additional engineering or apps.

Design Workshops

The TEL research group is developing relationships with community stakeholders in order to place teachers and students at the center of its R&D. We will lead iterative design sessions to learn about the pain points that teachers and students face daily, particularly those caused by the pandemic. We plan to develop partnerships over the course of 2020-2021 and to lead workshops that follow the principles of human-centered design.

Collective Embodied Learning: Weather and Heatscapes

ASU Learning Humans, 21 July 2020

Projects

TEL studies and pilots in 2020-2021 took on added salience and urgency as the COVID-19 pandemic took hold.

Science Prep Academy

⟶ NSF ASU-NERC Partnership for Neurodiverse Computational Thinking and Telematic Embodied Learning

How can Telematic Embodied Learning approaches extend computational thinking in computer science contexts to foster creative and neurodiverse online school experiences?

How can Telematic Embodied Learning approaches extend computational thinking in computer science to create more robust and equitable career opportunities for students with autism? Synthesis is working with researchers from Mary Lou Fulton Teachers College Learning and the Science Prep Academy, a private middle and high school in Arizona focused on STEM education. Together they are developing pedagogies and gestural electronic music instruments that autistic students can play together in live ensemble sessions while telematically connected over the internet. Our goal is to inspire collective and embodied STEM learning through expressive and creative group activities.

Engaging Teachers and Neurodiverse Middle School Students in Tangible and Creative Computational Thinking Activities

In August 2021, a team led by faculty investigators Drs. Sha Xin Wei, Mirka Koro, Seth Thorn, and Margarita Pivovarova, in partnership with Science Prep Academy, was awarded a National Science Foundation grant of almost $1 million. The grant funds the development of hybrid physical-computational platforms and of social, expressive, and computational learning pathways for neurodiverse students. These pathways are built around collective creative musical expression using wearable instruments, both in person and across the internet. (For more information, see ASU News.)

Diagrammatic

A gestural interface that lets students organize, create, and trace conceptual connections across multimedia learning materials, and lets educators design collaborative exercises.

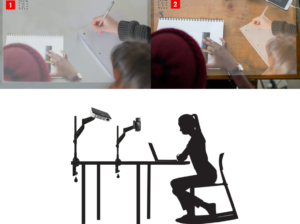

Sutured spaces for virtual classrooms

A networked setup for synchronous classroom activity. It supports any scenario that requires individual workspaces, which instructors can observe in real time and then either intervene in privately or share with the entire group of participants.

Media Choreography and Playful Environments

In this responsive media studio course, students with no programming background make persuasive performances and installations using pre-built media processing software instruments and accessories. The emphasis is on experiential design, ensemble activity, and, where possible, augmenting the physical environment beyond screens and keyboards.

Complex Systems (Weather, Geography, Heatscapes)

A tabletop environment into which participants can jointly dip their hands and physical props to interact with and study a complex system, such as weather physics, heatscapes, or urban infrastructure.

- Virtual Classroom: Shared tabletop.

- Complex Systems: Tabletop projection.

Technology

- Gesture tracking

- Systems chart tracing

- Augmented Reality

- Streaming multi-channel video and audio

- Custom hardware for physical interaction

- Real-time complex systems simulation (e.g., weather patterns)

- Video portals and mated tabletops

For more on technical resources, see Synthesis Center techniques.

Team

Garrett L Johnson (AME MAS PhD): Research lead, experience design

Gabriele Carotti-Sha (San Francisco): External projects coordinator, experience design

Muindi F Muindi (U Washington, Seattle): Performative experience design

Tianhang Liu (AME Digital Culture): Augmented reality, research assistant

Andrew Robinson (Synthesis, Weightless, AME Digital Culture): Real-time media, user interfaces

Ivan Mendoza (AME Digital Culture): Gesture tracking, 3D graphics

Consultants

Connor Rawls (Synthesis): Media choreography systems, network media, responsive environments

Pete Weisman (AME Technical Director): Audio-visual systems, responsive environments

Omar Faleh (Weightless, Morscad, Montreal): Augmented reality and urban spaces, architecture, interactive media

Sha Xin Wei PhD (Synthesis, AME): Director, experience design, external projects

Collaborators

Tim Wells: Faculty Associate, Curriculum, Pedagogy, & Methodology | Mary Lou Fulton Teachers College, ASU

Mirka Koro: Professor, Qualitative research | Mary Lou Fulton Teachers College, ASU

Ananí M. Vasquez: PhD Learning, Literacies and Technologies | Mary Lou Fulton Teachers College, ASU